Originally published on Hewlett Packard Enterprise.

New interest in supercomputers is being spurred by capabilities brought to light in the race to find a cure for COVID-19. Medicine, finance, logistics, and other fields stand to benefit.

“It’s tough to make predictions, especially about the future,” is a famous pronouncement attributed to baseball great Yogi Berra. Financial services professionals are no strangers to that challenge. For them, predicting the future—or trying to—is a central task, requiring the utmost in computing power.

“In financial services, predicting the future value of a particular asset may take into account factors as varied as economies, weather, and sentiments,” says Dr. Eng Lim Goh, chief technology officer, AI, at Hewlett Packard Enterprise. According to Goh, financial wizards need supercomputer power to analyze the large number of variables that come into play as they seek to optimize their portfolios relative to global risk.

Although financial services firms have been using powerful computers for decades, new interest in supercomputers is being spurred by capabilities brought to light in the frenzied race to find a cure for COVID-19. For example, high-dimensional data, which is found in the study of gene expression, is also prevalent, although not fully leveraged, in financial services, says Goh. Such data has many more variables (columns) than samples (rows), sometimes tens of thousands of variables. “After having recently done analytics and machine learning on such challenging datasets, from inpatients as well as clinical trial volunteers, we can now bring that know-how to advance the financial industry,” Goh explains.
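To make “more columns than rows” concrete, the sketch below builds a small hypothetical dataset with 8 samples and 1,000 variables and computes its rank with exact Gaussian elimination. However many variables there are, the rank can never exceed the number of samples, so the data pins down at most 8 independent directions out of 1,000; that is one reason such datasets are hard to analyze with conventional methods. The sizes and random values are illustrative assumptions, not drawn from any real study.

```python
import random
from fractions import Fraction


def matrix_rank(rows):
    """Rank of a matrix via Gaussian elimination using exact Fraction arithmetic."""
    m = [[Fraction(x) for x in row] for row in rows]
    rank = 0
    n_cols = len(m[0]) if m else 0
    for col in range(n_cols):
        # Find a row at or below the current rank position with a nonzero pivot.
        pivot = next((r for r in range(rank, len(m)) if m[r][col] != 0), None)
        if pivot is None:
            continue
        m[rank], m[pivot] = m[pivot], m[rank]
        # Eliminate this column from every row below the pivot row.
        for r in range(rank + 1, len(m)):
            factor = m[r][col] / m[rank][col]
            if factor:
                m[r] = [a - factor * b for a, b in zip(m[r], m[rank])]
        rank += 1
        if rank == len(m):  # rank cannot exceed the number of rows
            break
    return rank


# Hypothetical high-dimensional dataset: 8 samples (rows), 1,000 variables (columns).
rng = random.Random(0)
n_samples, n_features = 8, 1000
X = [[rng.randint(-9, 9) for _ in range(n_features)] for _ in range(n_samples)]

rank = matrix_rank(X)
print(f"{n_features} variables, but rank is only {rank} (never more than {n_samples})")
```

In practice, numerical libraries such as NumPy compute rank with a singular value decomposition instead; exact fractions are used here only to keep the sketch dependency-free.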

HPC systems are making inroads in other mainstream fields as well. In logistics, for example, route optimization is critical, and it was once considered a problem ideally suited to quantum computers. However, HPC systems can simulate the work of quantum machines, eliminating the need to use expensive and experimental quantum technology, says Steve Conway, senior vice president of research at Hyperion Research.
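Why simulating quantum machines calls for HPC-class hardware becomes clearer from a minimal state-vector simulator, sketched below under the assumption of the simplest textbook approach (Conway does not say which simulation technique is used). An n-qubit state is stored as 2^n complex amplitudes, and each gate updates pairs of them. The helper names are hypothetical, and the memory estimate assumes 16 bytes per amplitude (two 64-bit floats).

```python
import math

# Hadamard gate as a 2x2 matrix.
S = 1 / math.sqrt(2)
HADAMARD = [[S, S], [S, -S]]


def apply_gate(state, gate, target):
    """Apply a 2x2 gate to qubit `target` of a state vector, returning a new vector."""
    new_state = state[:]
    stride = 1 << target
    for i in range(len(state)):
        if not i & stride:  # i has the target bit 0; j is its partner with the bit set
            j = i | stride
            a, b = state[i], state[j]
            new_state[i] = gate[0][0] * a + gate[0][1] * b
            new_state[j] = gate[1][0] * a + gate[1][1] * b
    return new_state


def uniform_superposition(n_qubits):
    """Start in |00...0> and apply a Hadamard gate to every qubit."""
    state = [0j] * (1 << n_qubits)
    state[0] = 1 + 0j
    for q in range(n_qubits):
        state = apply_gate(state, HADAMARD, q)
    return state


def state_vector_gib(n_qubits, bytes_per_amplitude=16):
    """Memory needed just to hold the 2**n amplitudes, in GiB."""
    return bytes_per_amplitude * 2**n_qubits / 2**30


probs = [abs(a) ** 2 for a in uniform_superposition(3)]
print(probs)  # eight equal probabilities, each about 0.125
for n in (30, 40):
    print(n, "qubits ->", state_vector_gib(n), "GiB")
```

At 30 qubits the state vector alone needs 16 GiB; every additional qubit doubles that, which is why larger simulations are spread across the memory of many supercomputer nodes.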

The power of the swarm

An artificial intelligence methodology that Goh has dubbed swarm learning applies both to COVID-19 research and to the processing of mainstream healthcare data. Swarm learning pools the resources of geographically dispersed HPC systems to gain better insights. “If one hospital in Holland has data on hundreds of patients, that’s not enough for some machine learning algorithm training. But if that data could be leveraged with data from hospitals in other countries, such as Germany and the U.K., you would have enough for machine learning,” Goh explains. “Swarm learning enables this by sharing only neural network weights, without sharing any patient data, thus overcoming privacy issues.” The innovation was conceived by Krishnaprasad Shastry, distinguished technologist at HPE’s Bangalore research lab, and his team. Interestingly, it uses a blockchain to enable secure and transparent coordination among the peer HPC nodes.
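The central idea in Goh's description, peers exchanging model weights rather than patient records, can be sketched in a few lines. This is not HPE's Swarm Learning implementation: it leaves out networking and the blockchain-based coordination Shastry's team uses, and it merges parameters with a sample-count-weighted average in the style of federated averaging, which is an assumption. The three sites, their synthetic data, and the one-variable linear model are all hypothetical.

```python
import random


def local_train(weights, data, lr=0.01, epochs=20):
    """Gradient descent on mean squared error for y ~ w*x + b, using only local data."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


def merge(peer_weights, peer_sizes):
    """Average the peers' parameters, weighted by each peer's sample count (assumed rule)."""
    total = sum(peer_sizes)
    w = sum(pw[0] * n for pw, n in zip(peer_weights, peer_sizes)) / total
    b = sum(pw[1] * n for pw, n in zip(peer_weights, peer_sizes)) / total
    return (w, b)


def make_site_data(rng, n, true_w=3.0, true_b=1.0):
    """Hypothetical private dataset for one site: y = 3x + 1 plus noise."""
    xs = [rng.uniform(-1, 1) for _ in range(n)]
    return [(x, true_w * x + true_b + rng.gauss(0, 0.1)) for x in xs]


rng = random.Random(42)
sites = {name: make_site_data(rng, n) for name, n in [("site_a", 30), ("site_b", 50), ("site_c", 40)]}

global_weights = (0.0, 0.0)
for _ in range(50):
    # Each peer trains on its own data; only (w, b) and a sample count are shared.
    local = [local_train(global_weights, data) for data in sites.values()]
    global_weights = merge(local, [len(data) for data in sites.values()])

print(global_weights)  # should end up close to (3.0, 1.0)
```

In this simulation, `merge` sees only the parameters and sample counts from each site, never the underlying (x, y) pairs.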

“You can apply swarm learning to other industries, such as oil and gas, manufacturing, credit, and advertising, where you want to share learnings with other groups without having to share your data,” says Goh.

HPC and AI in medicine

In medical research, the speed with which supercomputers perform complex calculations can significantly shorten time to discovery across multiple types of work, including:

- DNA sequencing: A thorough understanding of a viral genome enables researchers to narrow their search for drugs that can bind to specific proteins of that virus.

- Gene expression: RNA-Seq is a sequencing technique that enables researchers to measure the activity of the tens of thousands of genes that can come into play when a body reacts to a virus. Gene expression studies frequently produce high-dimensional data.

- Imaging: Cryogenic electron microscopy (cryo-EM) helps researchers visualize the structure of viruses as small as 100 nanometers.

- Models in 3D: High-fidelity and high-resolution models of the various proteins that make up a virus enable scientists to perform simulation exercises in their search for drugs to bind with them.

- Drug research: The PharML.Bind drug discovery framework incorporates neural network and deep learning technologies to help researchers predict compound affinity for protein structures.

- Vaccine research: AI-enabled modeling and simulation streamline clinical trials as data scientists search for effective vaccines.

While the movement of HPC from scientific labs to mainstream industry use has been underway for several years, there is no doubt the trend has been accelerated by the use of HPC systems in COVID-19 research, according to Mike Woodacre, chief technology officer for HPC and Mission Critical Systems at HPE. “In the case of COVID-19, it really is a matter of life and death how quickly we can come up with treatments. People are pushing the boundaries to use every technique possible to accelerate time to solution, just like the [NASA] moon-shot program,” he says. “Afterwards, you can step back and apply similar methodologies to other areas as we emerge from the pandemic.”

Supercomputing in the cloud: HPC-as-a-Service

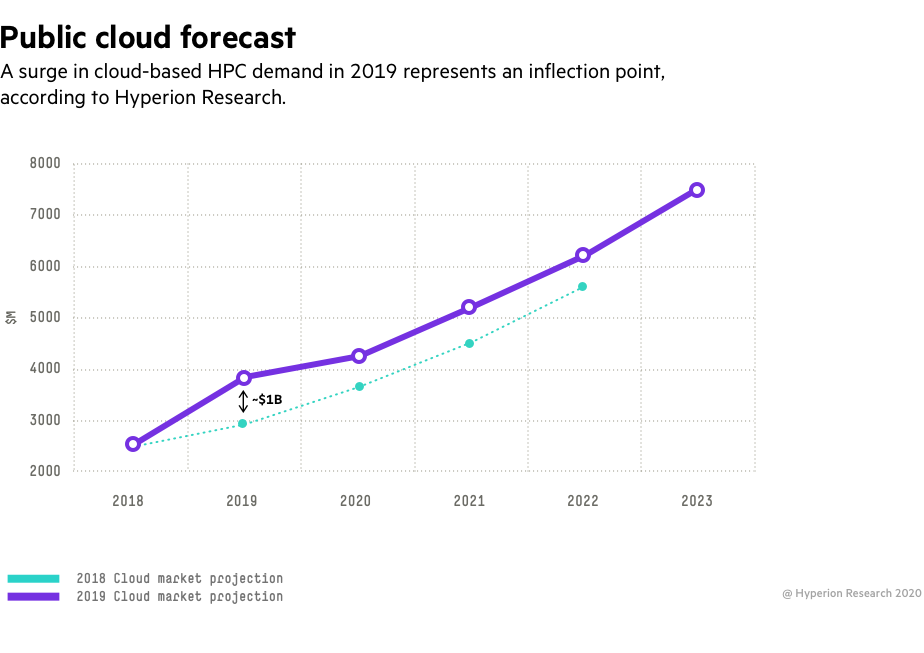

As mainstream organizations discover the power of supercomputing, many are gravitating to the cloud for some of their workloads. They’re finding significant value in cloud services, with benefits such as fast deployment, the ability to handle sudden spikes in demand, and a pay-per-use model (an operating expense) rather than an on-premises ownership model (a capital expense). According to Hyperion, 20 percent of all HPC jobs were run in cloud services in 2019, a slice of the market that is projected to grow at a compound annual growth rate of 16.8 percent between 2019 and 2024. Hyperion also anticipates that new HPC demand to combat COVID-19 may cause public cloud computing for HPC workloads to grow even faster. Hybrid Cloud-as-a-Service is a third model of note, but that’s a topic for another article.
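Because growth compounds, a 16.8 percent annual rate over the five years from 2019 to 2024 implies roughly a 2.17-fold increase, not the 84 percent a simple 5 × 16.8 would suggest. The short calculation below shows the arithmetic; it assumes the rate applies uniformly each year to whatever quantity Hyperion measures, which the article does not specify.

```python
def compound_growth_factor(rate, years):
    """Overall multiplier implied by a compound annual growth rate over `years` years."""
    return (1 + rate) ** years


factor = compound_growth_factor(0.168, 2024 - 2019)
print(round(factor, 2))  # 2.17, i.e. about 117 percent growth over five years
```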

“All the big American cloud services providers have gotten into [HPC-as-a-Service],” says Conway. “Most customers don’t have enough resources on premises to do everything they’d like to do, so the surge or overload is going to cloud providers—sometimes two or three times as much work as they can afford to do on-premises. Some companies have a big project one or two times a year. It doesn’t make sense to have a supercomputer on-prem for that.”
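Conway's point about once- or twice-a-year projects comes down to a break-even calculation: owning costs roughly the same each year regardless of use, while cloud spending scales with the hours consumed. The sketch below uses entirely hypothetical figures and function names to show the shape of that comparison; real pricing, discounts, data-transfer charges, and utilization will differ.

```python
def break_even_hours(annual_ownership_cost, cloud_cost_per_hour):
    """Hours of use per year above which owning becomes cheaper than renting."""
    return annual_ownership_cost / cloud_cost_per_hour


def cheaper_option(annual_ownership_cost, cloud_cost_per_hour, hours_needed_per_year):
    """Compare a fixed yearly ownership cost with pay-per-use cloud spending."""
    cloud_cost = cloud_cost_per_hour * hours_needed_per_year
    return "cloud" if cloud_cost < annual_ownership_cost else "on-premises"


# All figures are hypothetical, for illustration only.
OWN_PER_YEAR = 1_000_000  # amortized purchase, power, cooling, and staff
CLOUD_PER_HOUR = 500      # comparable cloud capacity, per hour of use

print(break_even_hours(OWN_PER_YEAR, CLOUD_PER_HOUR))  # 2000.0
two_projects = 2 * 14 * 24  # two big projects a year, two weeks of round-the-clock use each
print(cheaper_option(OWN_PER_YEAR, CLOUD_PER_HOUR, two_projects))  # cloud
```

With these made-up numbers, ownership pays off only above about 2,000 hours of use a year (roughly 23 percent of the 8,760 hours in a year), while the two projects use 672.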

Lessons learned, lessons applied

As HPC enters the mainstream, IT professionals are learning more about these once-arcane systems, including the value of tuning and optimization. “If the application is available and tuned, it can take on the work quickly,” says Goh. If the application is not available or tuned for a supercomputer, a specialist might have to port and tune it. Although this work is time-consuming up front, it is essential if supercomputers are to deliver the rapid processing speeds they are capable of.

In a typical case, an engineer might try out a new application on a laptop. There might be 100 different scenarios to try, and if each run takes several days, completing the work could take the better part of a year. “One hundred scenarios at two to three days apiece would just take too long,” says Goh. However, he explains, “if that application has been parallelized and tuned for a supercomputer, the same work might only take a week to run.”
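Goh's arithmetic can be made explicit. On a laptop, 100 scenarios at two to three days each add up to 200 to 300 days. A supercomputer attacks this in two ways that the sketch below separates: running independent scenarios at the same time, and making each run faster through porting and tuning. The function name, concurrency, and speedup values are hypothetical, and the model ignores queue waits and I/O overheads.

```python
import math


def wall_clock_days(n_scenarios, days_per_run, concurrent_runs=1, per_run_speedup=1.0):
    """Days to finish a sweep of independent scenarios.

    concurrent_runs: how many scenarios execute at once (parallelism across runs).
    per_run_speedup: how much faster each run is after porting and tuning.
    """
    batches = math.ceil(n_scenarios / concurrent_runs)
    return batches * days_per_run / per_run_speedup


# Laptop: one scenario at a time, two to three days each.
print(wall_clock_days(100, 2), wall_clock_days(100, 3))  # 200.0 300.0

# Hypothetical supercomputer allocation: 25 scenarios at once, each run 2x faster after tuning.
print(wall_clock_days(100, 3, concurrent_runs=25, per_run_speedup=2.0))  # 6.0
```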

Getting started

For companies getting started with supercomputing, a good approach is to seek out scientific computing centers doing work similar to what they have in mind, Woodacre notes. “The key is to look around and find people who are doing similar research and learn from their HPC techniques,” he says. “At many HPC centers (the University of Pittsburgh Supercomputer Center is one example), you have a great concentration of knowledge, HPC systems, large-memory machines, and in-house experts to steer people to the right tools.”

Meanwhile, the profile of HPC systems in the mainstream is steadily increasing, according to Goh. “When a new application is taking too long, there is an appreciation of the need to put supercomputer power behind it—that HPC speeds up time,” he says.

And speeding up time is one way to bring the future closer, making it easier to overcome Yogi Berra’s legendary conundrum.

At a glance:

- Stay informed: Supercomputers might seem arcane, but paying attention to the major research initiatives will keep you current on the state of supercomputing work.

- Compare: Match the HPC needs of your own business to advances being made on the leading edges of research, including COVID-19 research.

- Consider the cloud: HPC-as-a-Service is a great way to affordably tap into supercomputing power as needed.

- Optimize, optimize: Your applications will likely need to be ported, parallelized, and tuned to run on a supercomputer. Apply upfront expertise to maximize processing speed.